Large Language Model Text Interaction

Large language model text interaction is the exchange of information between a user and a large language model in text form.

Principle

- Language Understanding: Large language models, based on deep learning technologies such as the Transformer architecture, can understand the semantics, grammar, and logical relationships in text through learning from vast amounts of text data. The model converts the input text into vector representations, performing feature extraction and analysis in multiple layers of neural networks to capture the deep meanings of the text.

- Generating Responses: Based on its understanding of the input text, the model generates a response by repeatedly predicting a probability distribution over the next token (a word, part of a word, or a character) and selecting from it. The model takes contextual information, language habits, and semantic coherence into account so that the response is reasonable, fluent, and meaningful.
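
The response-generation step described above can be illustrated with a minimal sketch. It assumes a toy three-word vocabulary and hand-picked scores (both hypothetical); a real model computes such scores with its neural network over a vocabulary of many thousands of tokens.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Pick the next token at random, weighted by its predicted probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The weather is"
vocab = ["sunny", "rainy", "banana"]
logits = [2.0, 1.0, -3.0]

probs = softmax(logits)
greedy = vocab[probs.index(max(probs))]  # always take the most likely token
sampled = sample_next_token(vocab, logits, rng=random.Random(0))
print(dict(zip(vocab, (round(p, 3) for p in probs))), greedy, sampled)
```

Greedy selection always returns the most probable token, while sampling introduces variety; a lower `temperature` makes sampling behave more like greedy selection.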

Interaction Methods

- Conversational Interaction: Users pose questions, request suggestions, or engage in discussions with the large language model in text form, and the model responds and provides feedback in a conversational manner, similar to a dialogue between people. For example, if a user asks, "How can I improve my writing skills?", the model will provide relevant methods and suggestions.

- Text Generation Tasks: Users can give the model a specific theme or set of requirements and have it generate various types of text, such as articles, stories, poems, or code. For instance, if a user requests, "Write an essay about spring," the model will generate an essay based on its understanding of "spring" and general writing conventions.

- Knowledge Query and Acquisition: Users can treat the model as a knowledge repository, inputting specific queries to obtain relevant information and knowledge. For example, if a user queries, "What significant events happened on this day in history?", the model will respond based on its learned historical knowledge.
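
All three interaction methods come down to sending text and receiving text. A minimal sketch of how a conversation might be tracked is shown here; it assumes the common "user"/"assistant" role convention, and the function names and the assistant reply are hypothetical, not taken from OriginMan.

```python
def add_turn(history, role, text):
    """Append one turn to the conversation history."""
    history.append({"role": role, "content": text})
    return history

def render_prompt(history):
    """Flatten the history into a single text prompt for a text-only model."""
    lines = [f"{turn['role']}: {turn['content']}" for turn in history]
    lines.append("assistant:")  # cue the model to write the next reply
    return "\n".join(lines)

history = []
add_turn(history, "user", "How can I improve my writing skills?")
# Hypothetical reply, included only to show a multi-turn history
add_turn(history, "assistant", "Read widely and write a little every day.")
add_turn(history, "user", "Write an essay about spring.")

prompt = render_prompt(history)
print(prompt)
```

Keeping earlier turns in the prompt is what lets the model use context from the conversation so far.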

Running Example

OriginMan interacts through a large model gateway. In the background, the gateway captures content from a knowledge base and parses it, then sends a response that incorporates the knowledge-base information to the user.
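
The general idea of answering with the help of a knowledge base can be sketched as follows. This is not OriginMan's gateway implementation: the entries, the word-overlap ranking, and the function names are all assumptions chosen to keep the example small.

```python
import re

# Hypothetical knowledge-base entries; a real gateway would load its own content.
KNOWLEDGE_BASE = [
    "Spring is the season between winter and summer.",
    "ROS 2 nodes exchange messages over named topics.",
    "A Transformer is a neural network architecture built on attention.",
]

def tokenize(text):
    """Lowercase the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, entries, top_k=1):
    """Rank entries by how many words they share with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(entry)), entry) for entry in entries]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [entry for score, entry in scored[:top_k] if score > 0]

def build_prompt(query, entries):
    """Place the retrieved entries in front of the question."""
    context = retrieve(query, entries)
    context_text = "\n".join(context) if context else "(no matching entry)"
    return f"Context:\n{context_text}\n\nQuestion: {query}\nAnswer:"

question = "How do ROS 2 nodes exchange messages?"
prompt = build_prompt(question, KNOWLEDGE_BASE)
print(prompt)
```

Production systems typically replace the word-overlap ranking with embedding-based search, but the flow of retrieving first and then prompting stays the same.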

Turn on the OriginMan power, then run the following command in MobaXterm or VS Code:

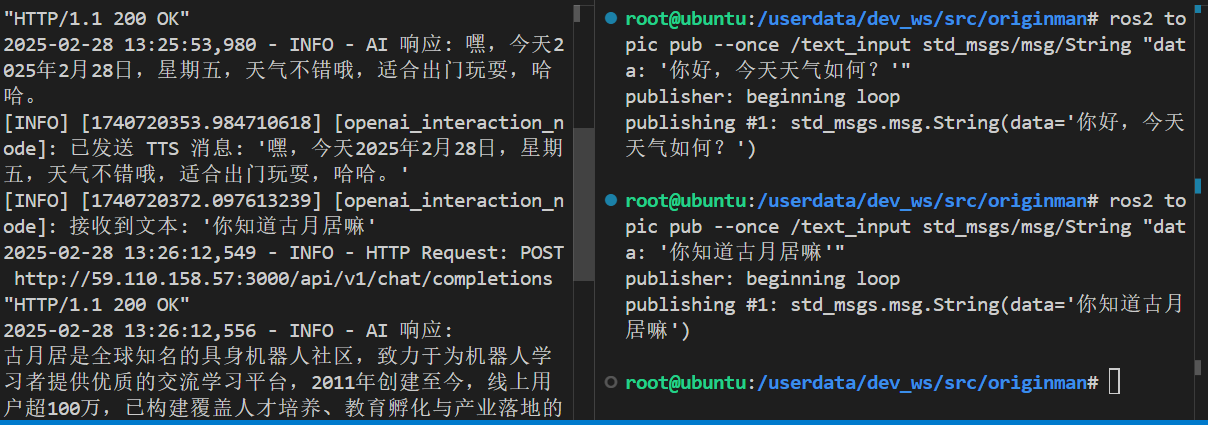

After running, you can see the following log output in the terminal:

root@ubuntu:/userdata/dev_ws/src/originman# ros2 run originman_llm_chat llm_chat_node

[INFO] [1740719979.314563770] [openai_interaction_node]: OpenAI interaction node started, waiting for input/text_input topic data...

[INFO] [1740719979.316873645] [openai_interaction_node]: Usage example: ros2 topic pub --once /text_input std_msgs/msg/String "data: 'Hello, how's the weather today?'"

In another terminal, run the following command:
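
The usage example printed in the node log above publishes a `std_msgs/msg/String` message to the `/text_input` topic with `ros2 topic pub --once`. An apostrophe inside a single-quoted YAML string (as in "how's") can trip up parsing, so the sketch below assembles the same command with the message encoded as JSON, which YAML parsers generally accept. The helper name `build_pub_command` is hypothetical, and the topic name is taken from the log's usage line.

```python
import json
import shlex

def build_pub_command(text, topic="/text_input"):
    """Return the argument list for a one-shot `ros2 topic pub` call."""
    payload = json.dumps({"data": text})  # JSON quoting handles apostrophes safely
    return ["ros2", "topic", "pub", "--once", topic,
            "std_msgs/msg/String", payload]

command = build_pub_command("Hello, how's the weather today?")
print(shlex.join(command))  # paste the printed line into the second terminal

# To run it directly instead (requires a sourced ROS 2 environment):
# import subprocess; subprocess.run(command, check=True)
```

`shlex.join` adds the shell quoting, so the printed line can be copied as-is.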

Attention

Connect OriginMan to the network before running these commands. For the connection steps, refer to Network Configuration and Remote Development Methods.