Visual Line Following

Visual line following is a common scenario in the daily work of robots, often seen in warehouse environments or parks where line-following robots are at work.

This section will introduce how to implement line following using OriginMan.

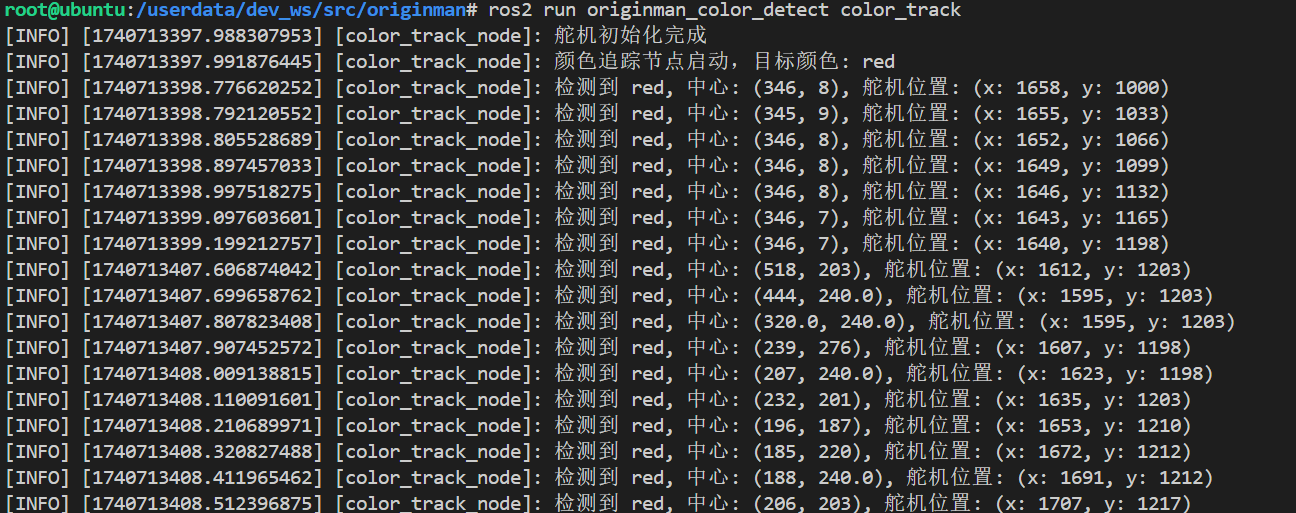

Example Run

You can stick a black line segment on the white map of the kit and place the OriginMan at the starting point of the black line segment.

Then turn on the OriginMan power, and you can start the following command in MoboXterm or Vscode:

After running, you will first see that the OriginMan will lower its head to observe whether there is a black line in its field of view. If there is, it will perform line following.

Attention

If there is no black line, you can also place a black data line in front of the OriginMan.

Code Implementation

The code is located at: originman_line_follower/line_follower.py

#!/usr/bin/env python3

# encoding:utf-8

import rclpy

from rclpy.node import Node

import cv2

from cv_bridge import CvBridge

from sensor_msgs.msg import Image

from std_msgs.msg import String

import numpy as np

import threading

import time

import math

import hiwonder.ros_robot_controller_sdk as rrc

from hiwonder.Controller import Controller

import hiwonder.ActionGroupControl as AGC

class LineFollowerNode(Node):

def __init__(self):

super().__init__('visual_patrol_node')

self.bridge = CvBridge()

# Create image publisher

self.image_pub = self.create_publisher(Image, 'image_raw', 10)

# Create line following information publisher

self.line_info_pub = self.create_publisher(String, 'line_info', 10)

# Open camera

self.cap = cv2.VideoCapture(0)

if not self.cap.isOpened():

self.get_logger().error("Unable to open camera")

return

# Robot control initialization

self.board = rrc.Board()

self.ctl = Controller(self.board)

self.servo_data = {'servo1': 891, 'servo2': 1661}

# Initialize servo position

self.init_move()

# Line following related parameters

self.target_color = 'black' # Default to track black line

self.size = (640, 480)

self.img_centerx = self.size[0] / 2

self.line_center_x = -1

# ROI and weights

self.roi = [

(240, 280, 0, 640, 0.1), # [ROI, weight]

(340, 380, 0, 640, 0.3),

(440, 480, 0, 640, 0.6)

]

self.roi_h1 = self.roi[0][0]

self.roi_h2 = self.roi[1][0] - self.roi[0][0]

self.roi_h3 = self.roi[2][0] - self.roi[1][0]

self.roi_h_list = [self.roi_h1, self.roi_h2, self.roi_h3]

# Color range

self.lab_data = {

'black': {'min': [0, 166, 135], 'max': [255, 255, 255]}

}

# Timer for image processing and publishing

self.timer = self.create_timer(0.1, self.process_image)

# Start action control thread

self.action_thread = threading.Thread(target=self.move)

self.action_thread.daemon = True

self.action_thread.start()

self.get_logger().info("Visual line following node started, target color: {}".format(self.target_color))

def init_move(self):

"""Initialize servo position"""

self.ctl.set_pwm_servo_pulse(1, self.servo_data['servo1'], 500)

self.ctl.set_pwm_servo_pulse(2, self.servo_data['servo2'], 500)

self.get_logger().info("Servo initialization completed")

def get_area_max_contour(self, contours):

"""Find the largest contour by area"""

max_area = 0

max_contour = None

for c in contours:

area = math.fabs(cv2.contourArea(c))

if area > max_area and area > 5: # Minimum area threshold

max_area = area

max_contour = c

return max_contour, max_area

def line_detect(self, img):

"""Line detection"""

img_h, img_w = img.shape[:2]

frame_resize = cv2.resize(img, self.size, interpolation=cv2.INTER_NEAREST)

frame_gb = cv2.GaussianBlur(frame_resize, (3, 3), 3)

centroid_x_sum = 0

weight_sum = 0

center_ = []

n = 0

for r in self.roi:

roi_h = self.roi_h_list[n]

n += 1

blobs = frame_gb[r[0]:r[1], r[2]:r[3]]

frame_lab = cv2.cvtColor(blobs, cv2.COLOR_BGR2LAB)

mask = cv2.inRange(frame_lab,

tuple(self.lab_data[self.target_color]['min']),

tuple(self.lab_data[self.target_color]['max']))

eroded = cv2.erode(mask, cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3)))

dilated = cv2.dilate(eroded, cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3)))

dilated[:, 0:160] = 0 # Mask left side

dilated[:, 480:640] = 0 # Mask right side

cnts = cv2.findContours(dilated, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_TC89_L1)[-2]

cnt_large, area = self.get_area_max_contour(cnts)

if cnt_large is not None:

rect = cv2.minAreaRect(cnt_large)

box = np.int0(cv2.boxPoints(rect))

for i in range(4):

box[i, 1] = box[i, 1] + (n - 1) * roi_h + self.roi[0][0]

box[i, 1] = int(box[i, 1] * img_h / self.size[1])

box[i, 0] = int(box[i, 0] * img_w / self.size[0])

cv2.drawContours(img, [box], -1, (0, 0, 255, 255), 2)

pt1_x, pt1_y = box[0, 0], box[0, 1]

pt3_x, pt3_y = box[2, 0], box[2, 1]

center_x, center_y = (pt1_x + pt3_x) / 2, (pt1_y + pt3_y) / 2

cv2.circle(img, (int(center_x), int(center_y)), 5, (0, 0, 255), -1)

center_.append([center_x, center_y])

centroid_x_sum += center_x * r[4]

weight_sum += r[4]

if weight_sum != 0:

self.line_center_x = int(centroid_x_sum / weight_sum)

cv2.circle(img, (self.line_center_x, int(center_y)), 10, (0, 255, 255), -1)

self.get_logger().info(f"Detected black line center: {self.line_center_x}")

else:

self.line_center_x = -1

return img

def move(self):

"""Robot action control thread"""

while True:

if rclpy.ok():

if self.line_center_x != -1:

if abs(self.line_center_x - self.img_centerx) <= 50:

AGC.runActionGroup('go_forward')

self.get_logger().info("Moving forward along the black line")

elif self.line_center_x - self.img_centerx > 50:

AGC.runActionGroup('turn_right_small_step')

self.get_logger().info("Adjusting to the right")

elif self.line_center_x - self.img_centerx < -50:

AGC.runActionGroup('turn_left_small_step')

self.get_logger().info("Adjusting to the left")

else:

time.sleep(0.01)

else:

time.sleep(0.01)

def process_image(self):

"""Process image and publish"""

ret, frame = self.cap.read()

if not ret:

self.get_logger().error("Unable to read image frame")

return

# Line detection

frame = self.line_detect(frame)

line_msg = String()

line_msg.data = f"Line Center X: {self.line_center_x}"

self.line_info_pub.publish(line_msg)

# Publish image

self.image_pub.publish(self.bridge.cv2_to_imgmsg(frame, "bgr8"))

def __del__(self):

if self.cap.isOpened():

self.cap.release()

def main(args=None):

rclpy.init(args=args)

node = LineFollowerNode()

try:

rclpy.spin(node)

except KeyboardInterrupt:

node.get_logger().info("Node interrupted")

node.destroy_node()

rclpy.shutdown()

if __name__ == '__main__':

main()

Switching Colors

In addition to black, you can also choose to use the following colors: red, green, and blue for recognition. Just switch the corresponding parameters in the code.